February 25, 2025

How to Extract Web Data into Excel in 2025

Excel is widely used for data collection and analysis, and it is just as useful when the data you need lives on the web. To take advantage of that, you need a reliable way to get data from a web page into a worksheet.

That is easier said than done. Copying data into Excel by hand is tedious, especially when you have to repeat it. This article covers simpler methods, along with the tools that make them practical.

Read on to learn the different methods for extracting web data into Excel. We’ll also explore some challenges you may face and how to handle them.

Why Extract Web Data into Excel?

To lay a foundation, let’s look at why extracting web data into Excel is worthwhile:

- Web Data Analysis: Once web data is in a worksheet, you can sort, filter, and analyze it with Excel’s formulas and tools.

- Visualization: Excel’s charts and graphs make trends in web data easy to see.

- Automation: Collecting data manually is slow and repetitive. Excel’s web connectors and macros can automate the collection.

- Up-to-date Data: Imported web data can be refreshed on demand or on a schedule instead of being copied again.

- Efficiency: Once an import is set up, reports that depend on live web data update without manual rework.

- Data Integration: Web data pulled into Excel can be combined with your other datasets.

Now, let’s examine four practical techniques for extracting web data into Excel:

Method 1: Using Excel’s Built-in “Get Data from Web” Feature

Excel has a “Get Data from Web” feature that simplifies the process. It is one of the easiest ways to extract web data into Excel, ideal for creating tables and reports.

Steps:

- Open Excel and go to the Data tab.

- Click on Get Data > From Other Sources > From Web.

- Enter the URL of the website containing the data you need and click OK.

- Excel lists the tables it finds on the page in the Navigator window. Select the table you want.

- Click Load to import the data into Excel.

- Optionally, click Transform Data to use Power Query for modifications.

- To update the data later, click Refresh (or Data > Refresh All).

Best for: Web pages with structured tables, such as Wikipedia pages, financial reports, and product lists.

Example: If you want to extract exchange rates from a currency conversion site, simply paste the URL into Excel’s Get Data from Web tool, select the table showing exchange rates, and import it directly into your sheet.
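If you repeat this import often, a macro can do it for you. The sketch below is an alternative route rather than a recording of the steps above: it uses Excel’s older web query object (a QueryTable with a “URL;” connection) instead of Power Query. The URL and the table number are placeholders, and like the built-in feature it may miss tables that a page builds with JavaScript.

```vba
' Sketch: import one HTML table with Excel's older web query (QueryTable),
' not Power Query. The URL and table number below are placeholders.
Sub ImportExchangeRates()
    Dim qt As QueryTable

    ' "URL;" tells Excel this query table reads from a web page
    Set qt = ActiveSheet.QueryTables.Add( _
        Connection:="URL;https://example.com/exchange-rates", _
        Destination:=ActiveSheet.Range("A1"))

    With qt
        .WebSelectionType = xlSpecifiedTables   ' import only the tables listed below
        .WebTables = "1"                        ' the first table on the page
        .WebFormatting = xlWebFormattingNone    ' values only, no page styling
        .RefreshStyle = xlOverwriteCells        ' overwrite cells instead of inserting new ones
        .Refresh BackgroundQuery:=False         ' wait until the data has arrived
    End With
End Sub
```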

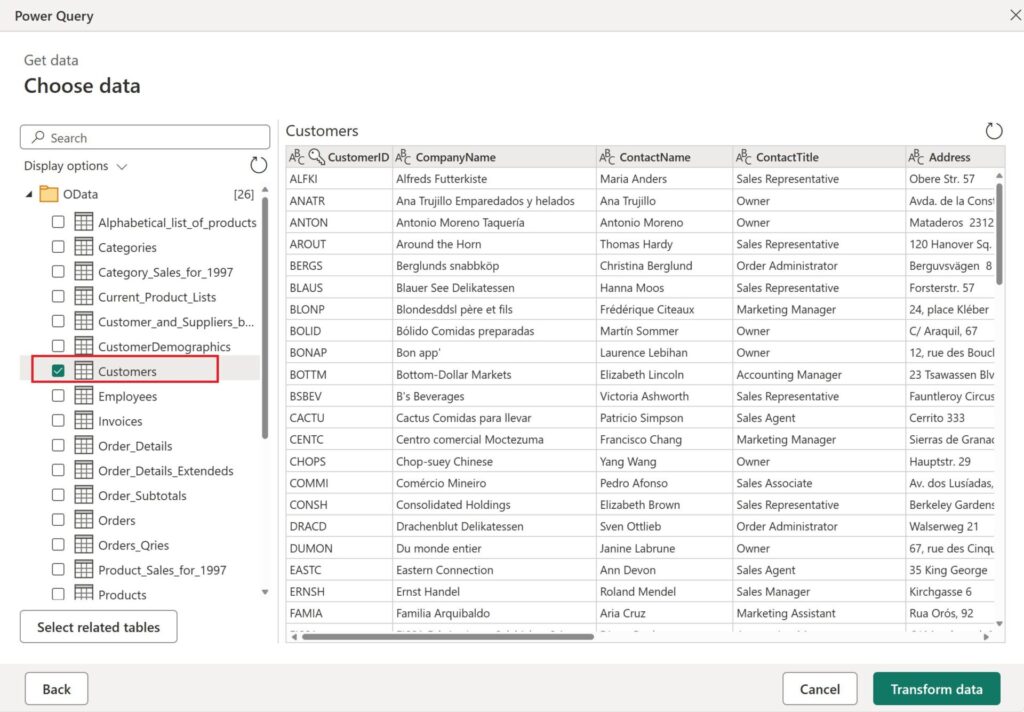

Method 2: Using Power Query for Advanced Data Extraction

Power Query is the engine behind Excel’s “Get Data from Web” feature, and working in the Power Query Editor gives you much more control over the result. It takes a little more effort than Method 1, but it lets you clean and reshape the data before it reaches your sheet.

Steps:

- Open Excel and go to Data > Get Data > From Other Sources > From Web.

- Enter the URL and click OK.

- Once the data is loaded in the Navigator, click Transform Data.

- Use Power Query to:

  - Remove unwanted columns.

  - Change data types.

  - Apply filters and transformations.

- Click Close & Load to import the cleaned data into Excel.

- Click Refresh All to update data when needed.

Best for: Extracting and cleaning data from semi-structured web pages.

Example: If you’re tracking stock market trends, Power Query lets you clean up irrelevant columns and keep only the data you need, such as stock prices and trading volumes.
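Behind the scenes, the Power Query Editor records each of these steps in M, its formula language. The macro below is a sketch of what such a query could look like for the stock example, added to the workbook with Workbook.Queries.Add (available in recent versions of Excel). The URL, the table position Source{0}, and the column names Symbol, Price, and Volume are hypothetical and must be changed to match the real page.

```vba
' Sketch: build an M query that keeps, types, and filters stock columns.
' URL, table position and column names are hypothetical placeholders.
Function BuildStockQueryM(url As String) As String
    ' Doubled quotes ("") inside a VBA string produce one literal quote
    BuildStockQueryM = _
        "let" & vbLf & _
        "    Source = Web.Page(Web.Contents(""" & url & """))," & vbLf & _
        "    Data = Source{0}[Data]," & vbLf & _
        "    Kept = Table.SelectColumns(Data, {""Symbol"", ""Price"", ""Volume""})," & vbLf & _
        "    Typed = Table.TransformColumnTypes(Kept, {{""Price"", type number}, {""Volume"", Int64.Type}})," & vbLf & _
        "    Filtered = Table.SelectRows(Typed, each [Volume] > 0)" & vbLf & _
        "in" & vbLf & _
        "    Filtered"
End Function

Sub AddStockQuery()
    ' Adds the query to the workbook without loading it to a sheet yet
    ThisWorkbook.Queries.Add Name:="StockData", _
        Formula:=BuildStockQueryM("https://example.com/stocks")
End Sub
```

After running AddStockQuery, you can load the result from Data > Queries & Connections by right-clicking the query and choosing Load To.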

Method 3: Using Web Scraping with VBA (For Dynamic Websites)

Excel’s built-in tools are useful but limited. Pages that load their content with JavaScript after they open can come back empty or incomplete.

In that case, you can use VBA (Visual Basic for Applications) together with SeleniumBasic, a free library that lets VBA control a real Chrome browser. Because the browser runs the page’s JavaScript, the script can read content the built-in tools miss. Here’s how it works:

Steps:

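- Install SeleniumBasic, and make sure the chromedriver.exe in its install folder matches your version of Chrome.

- Open Excel and press ALT + F11 to open the VBA editor.

- Go to Tools > References and tick Selenium Type Library.

- Click Insert > Module.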

- Copy and paste the following VBA script:

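```vba
' Requires SeleniumBasic and a reference to "Selenium Type Library"
' (Tools > References in the VBA editor).
Sub GetWebData()
    Dim driver As New Selenium.WebDriver
    Dim table As Object, tr As Object, td As Object
    Dim r As Long, c As Long

    ' Open Chrome at the site's base URL, then load the page path
    driver.Start "chrome", "https://example.com"
    driver.Get "/"

    ' Give the page's JavaScript a few seconds to render the content
    Application.Wait Now + TimeValue("00:00:03")

    ' Copy the first table on the page, one worksheet cell per table cell
    Set table = driver.FindElementByTag("table")
    For Each tr In table.FindElementsByCss("tr")
        r = r + 1
        c = 0
        For Each td In tr.FindElementsByCss("th, td")
            c = c + 1
            ActiveSheet.Cells(r, c).Value = td.Text
        Next td
    Next tr

    driver.Quit
End Sub
```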

- Change the URL, page path, and table selector to match the site you are scraping.

- Run the script to extract the data into Excel.

Best for: Extracting data from JavaScript-heavy or interactive websites.

Example: If a stock website loads live prices with JavaScript, a VBA scraper can pull the latest values at set intervals.

Method 4: Using Third-Party Web Scraping Tools

Third-party tools are useful when the web data, or the extraction process itself, is complex. Popular examples include ParseHub, Import.io, and Octoparse.

Steps:

- Download and install a web scraping tool (e.g., Octoparse).

- Open the tool and enter the website URL.

- Select the data fields you want to extract.

- Set up the extraction rules.

- Export the data to Excel (.xlsx or .csv format).

Best for: Large-scale web scraping or sites with anti-scraping measures.

Example: If you’re collecting product prices from multiple e-commerce sites, a third-party tool can automate the process without coding.

Top Tools for Extracting Data

The following tools can help you put the methods above into practice:

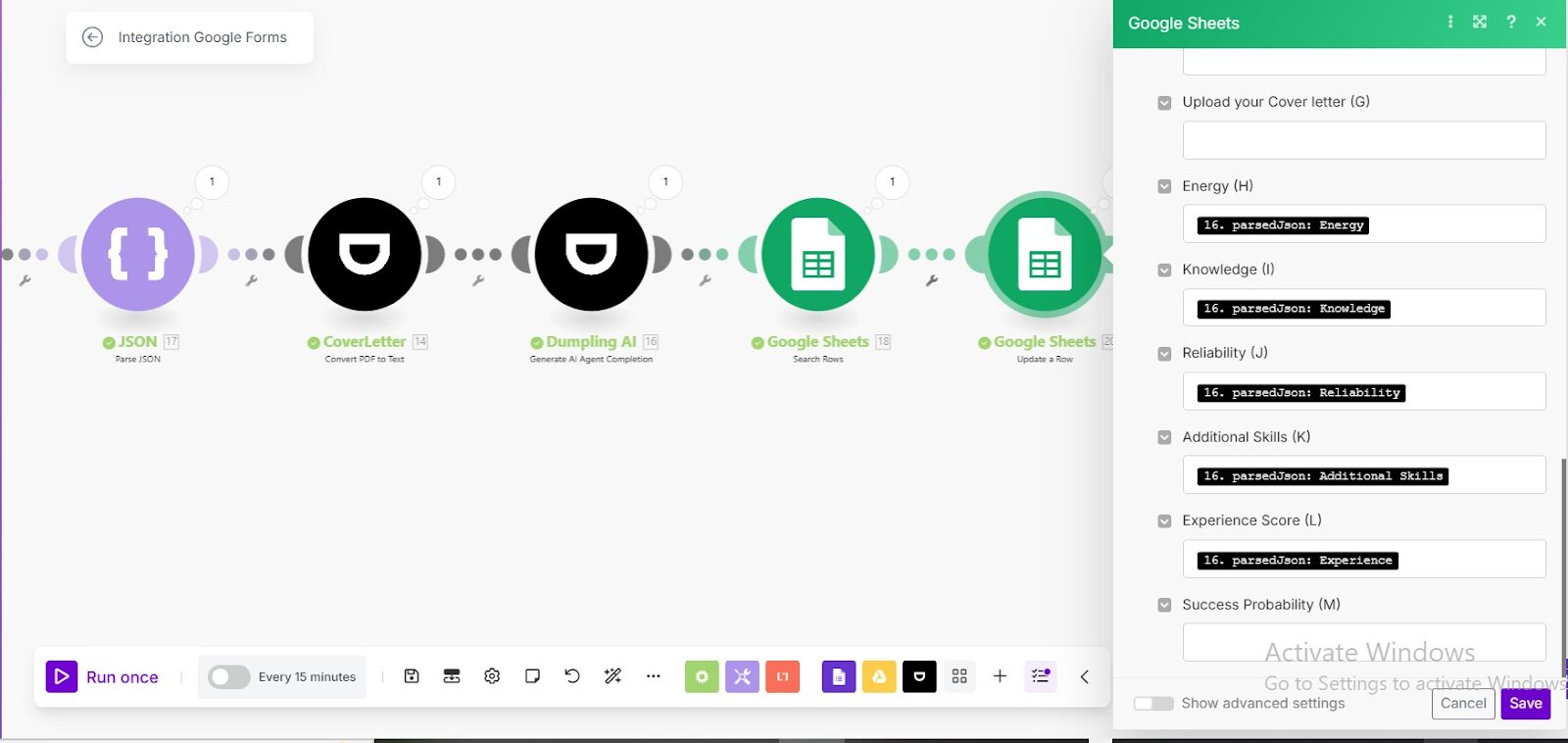

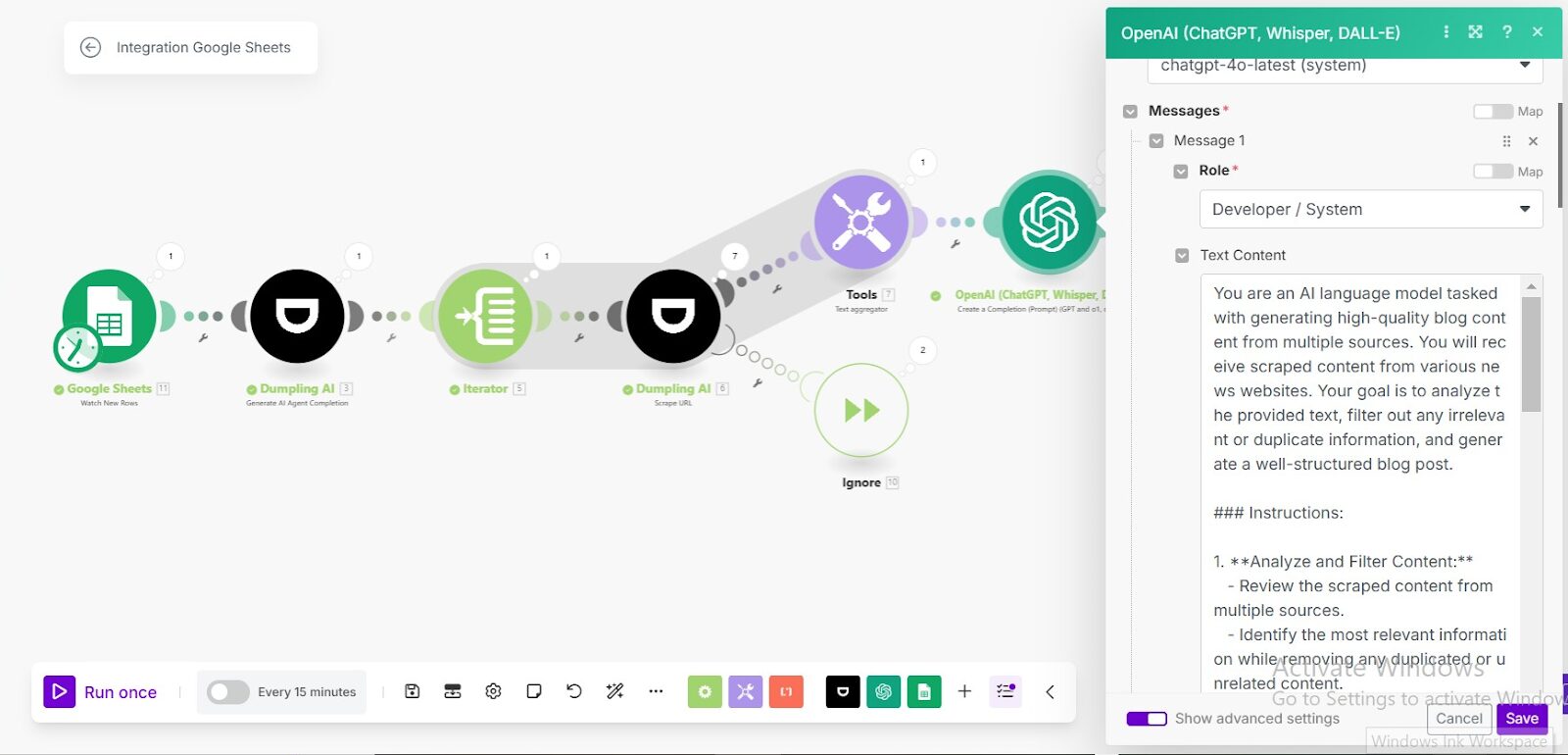

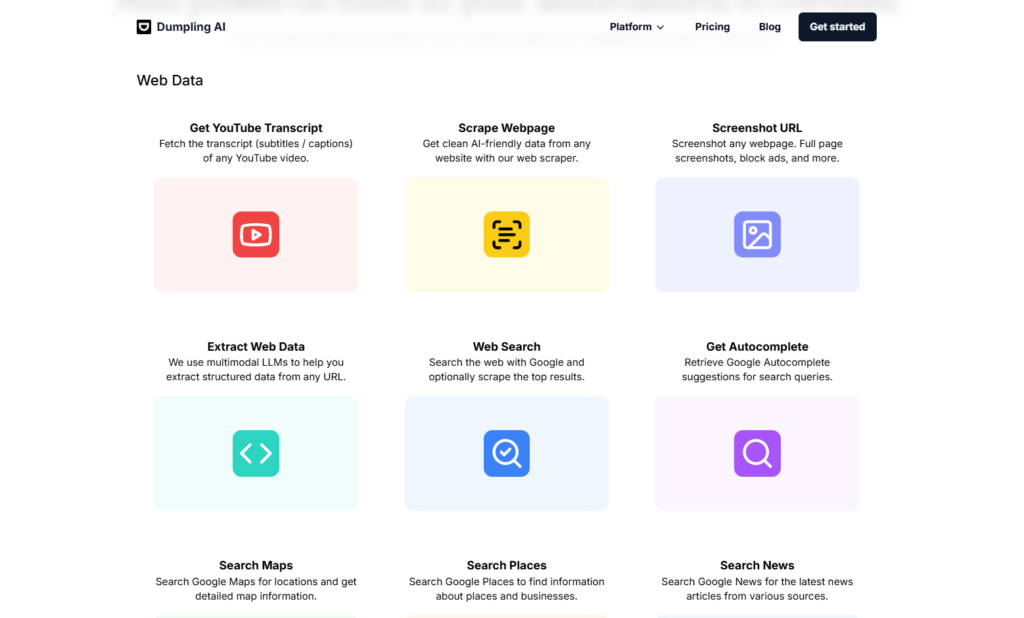

1. Dumpling.AI

Dumpling.AI offers numerous tools for businesses, including several for data extraction. One of them is a web data extraction tool that uses multimodal LLMs to help you extract structured data from any URL.

Dumpling aims to improve data analysis, streamline workflows, and automate data collection.

Data Type

- Structured text

- Tables and spreadsheets

- Natural language data processed by LLMs

Function

- AI-driven web scraping

- Data extraction and automation within Excel

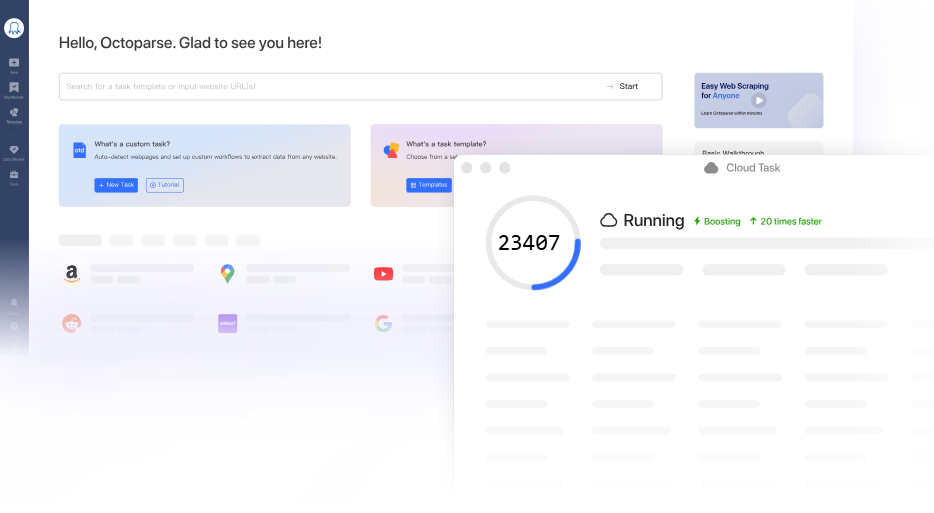

2. Octoparse

Octoparse stands out because it can send the data it extracts directly to Excel. It also requires no coding skills and is geared toward structured data.

Data Type

- Product listings

- News articles

- Business directories

Function

- No-code web scraping

- Cloud-based data extraction

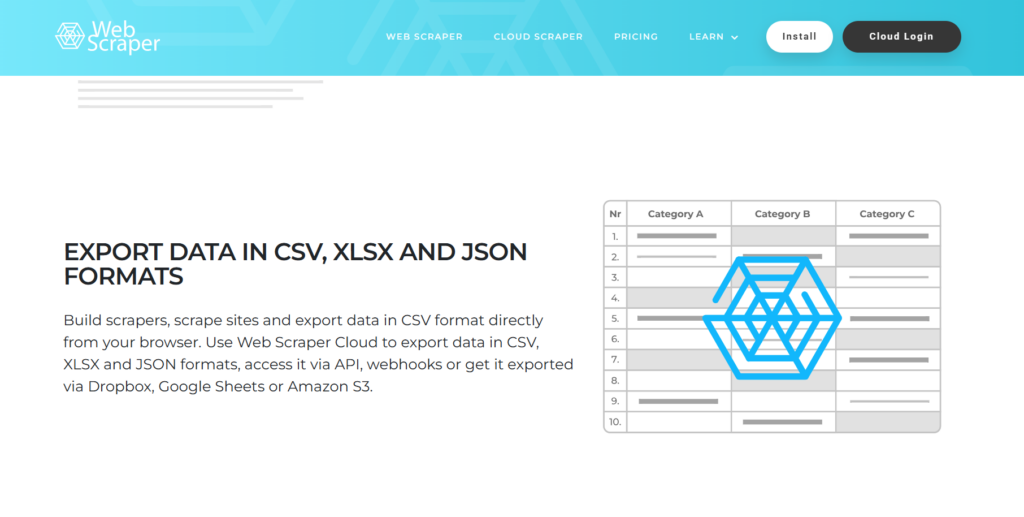

3. Web Scraper

Unlike Octoparse, Web Scraper doesn’t export data directly to Excel. Instead, the Chrome extension saves the data as a CSV file that Excel can open. Web Scraper is best suited to simpler data.

Data Type

- HTML page content

- Lists and tables

- Metadata

Function

- Browser-based web scraping

- Automated data extraction with custom rules
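Opening a single CSV in Excel needs no code, but if you import Web Scraper’s exports regularly, a macro saves a few clicks. This sketch uses a QueryTable with a “TEXT;” connection; the file path is hypothetical, and reading the file as UTF-8 (code page 65001) is an assumption about how the export is encoded.

```vba
' Sketch: import a CSV export into the active sheet.
' The file path is hypothetical; UTF-8 (code page 65001) is an assumption.
Sub ImportScrapedCsv()
    Dim qt As QueryTable

    ' "TEXT;" tells Excel this query table reads from a text file
    Set qt = ActiveSheet.QueryTables.Add( _
        Connection:="TEXT;C:\Downloads\scraped-data.csv", _
        Destination:=ActiveSheet.Range("A1"))

    With qt
        .TextFileParseType = xlDelimited    ' split columns on a delimiter
        .TextFileCommaDelimiter = True      ' the delimiter is a comma
        .TextFilePlatform = 65001           ' read the file as UTF-8
        .Refresh BackgroundQuery:=False     ' load the data now
    End With
End Sub
```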

4. Power Query

Power Query is Microsoft’s data connection and transformation tool, built into Excel. Besides extracting data from the web, it lets users clean, reshape, and prepare data for analysis.

Data Type

- CSV and Excel files

- Databases

- Web data

Function

- Data transformation and automation

- Integration with various data sources

5. Puppeteer

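Puppeteer is a Node.js library for controlling Chrome or Chromium, which makes it a good fit for scraping JavaScript-heavy websites; the results can then be saved as CSV or another format Excel can open. Its features include headless browsing, which runs the browser without a visible window.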

Data Type

- Dynamic web content

- Screenshots and PDFs

- Interactive website elements

Function

- Headless browser automation

- Scraping JavaScript-rendered content

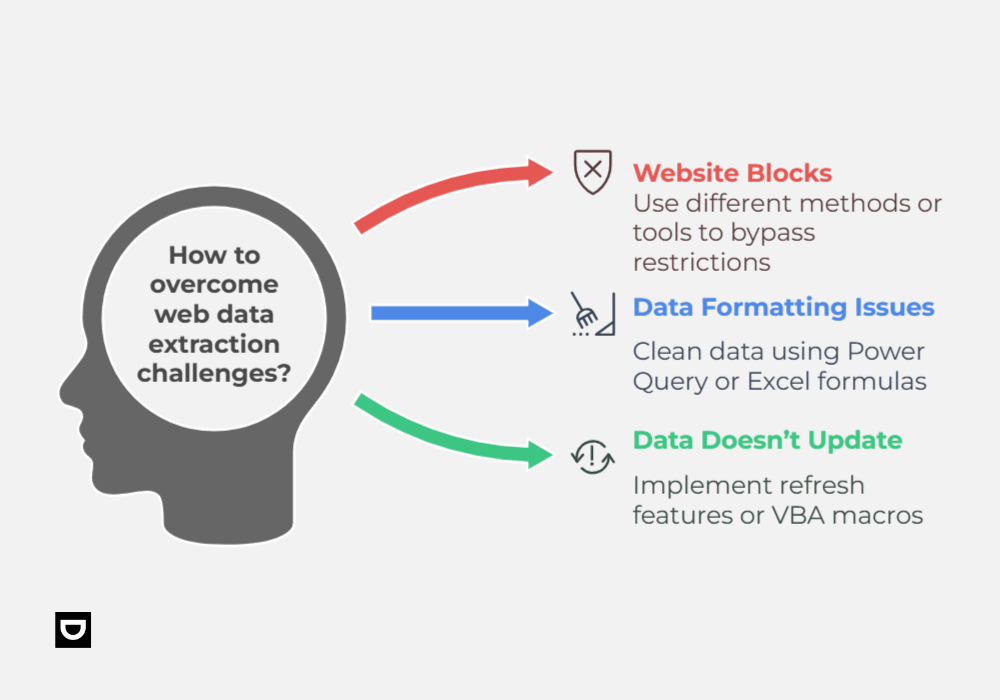

Common Challenges and Solutions

The four methods above cover most ways to get web data into Excel. However, they don’t come without challenges.

Here are three common ones:

1. Website Blocks Data Extraction

Websites may block scraping for many reasons, for example by limiting request rates or detecting automated browsers. Solutions to try:

- Using a different method (Power Query instead of VBA).

- Changing the user agent in the VBA script.

- Using paid scraping tools with proxy support.
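With SeleniumBasic, changing the user agent means passing Chrome’s --user-agent switch before the browser starts. The sketch assumes the same SeleniumBasic setup as the Method 3 script; the user-agent string is an arbitrary example, and the last step writes the value the page actually sees into A1 so you can confirm the change.

```vba
' Sketch: start Chrome with a custom user agent (requires SeleniumBasic).
' The user-agent string below is only an example value.
Sub GetWebDataWithUserAgent()
    Dim driver As New Selenium.WebDriver

    ' Chrome reads this switch at startup, so add it before Start/Get
    driver.AddArgument "--user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) " & _
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"

    driver.Start "chrome", "https://example.com"
    driver.Get "/"

    ' Record the user agent the page reports, to confirm the switch took effect
    ActiveSheet.Range("A1").Value = driver.ExecuteScript("return navigator.userAgent;")

    driver.Quit
End Sub
```

A different user agent only changes how the browser identifies itself; it does not help against blocks based on request rate or IP address, which is where proxy-capable tools come in.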

2. Data Formatting Issues

Scraped data often arrives with inconsistent formatting, such as stray spaces, numbers stored as text, or mixed date formats, which can hinder analysis. Possible solutions are to:

- Use Power Query to clean up messy data.

- Use Excel formulas like TEXT() or TRIM() to fix formatting.
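A common culprit in scraped text is the non-breaking space (character 160), which TRIM() does not remove; in a formula you can handle it with =TRIM(SUBSTITUTE(A2,CHAR(160)," ")). For cleaning many cells at once, the macro below is a sketch that does the same in VBA and also turns line breaks into spaces. The procedure names are hypothetical.

```vba
' Sketch: clean scraped text the way TRIM() does, plus non-breaking spaces.
Function CleanWebText(ByVal s As String) As String
    s = Replace(s, ChrW(160), " ")   ' non-breaking space -> normal space
    s = Replace(s, vbCr, " ")        ' line breaks -> spaces
    s = Replace(s, vbLf, " ")
    Do While InStr(s, "  ") > 0      ' collapse runs of spaces, like TRIM()
        s = Replace(s, "  ", " ")
    Loop
    CleanWebText = Trim(s)           ' VBA's Trim strips leading/trailing spaces
End Function

Sub CleanSelection()
    Dim cell As Range
    ' Only touch text cells; numbers and formulas results stay as they are
    For Each cell In Selection.Cells
        If VarType(cell.Value) = vbString Then
            cell.Value = CleanWebText(cell.Value)
        End If
    Next cell
End Sub
```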

3. Data Doesn’t Update Automatically

Imported data does not update by itself unless you set it up to. Here are some solutions:

- Use the Refresh feature in Power Query.

- Set up VBA macros for automatic updates.
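One way to set up such a macro is Application.OnTime, which schedules a procedure to run at a given time. In this sketch the macro refreshes every query and connection with ThisWorkbook.RefreshAll and then reschedules itself; the 15-minute interval and the procedure names are assumptions. Place the code in a standard module (Insert > Module).

```vba
' Sketch: refresh all queries every 15 minutes while the workbook is open.
' Interval and procedure names are assumptions; adjust as needed.
Public NextRefresh As Date

Sub StartAutoRefresh()
    ThisWorkbook.RefreshAll
    NextRefresh = Now + TimeValue("00:15:00")
    ' Schedule this same macro to run again at NextRefresh
    Application.OnTime EarliestTime:=NextRefresh, Procedure:="StartAutoRefresh"
End Sub

Sub StopAutoRefresh()
    ' Schedule:=False cancels the pending run; ignore the error if none exists
    On Error Resume Next
    Application.OnTime EarliestTime:=NextRefresh, _
        Procedure:="StartAutoRefresh", Schedule:=False
End Sub
```

Run StartAutoRefresh once to begin and StopAutoRefresh to end. If a run is still scheduled when you close the workbook, Excel may reopen it to run the macro, so stop the timer first.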

FAQs

Is It Legal To Scrape Data From a Website?

Legality depends on the website’s Terms of Service and the laws that apply to you. Publicly available data is often fine to collect, but always check the site’s terms and robots.txt file, or ask for permission when in doubt.

What’s the Best Method for Real-Time Data Updates?

The best approach is using Power Query with scheduled refresh or setting up VBA scripts with timers.
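For the Power Query route, the scheduled refresh lives in the query’s connection properties (Data > Queries & Connections > Properties, “Refresh every N minutes”). The sketch below sets the same options from VBA. It assumes a query named StockData, whose connection Excel typically names “Query - StockData”; change the name to match your workbook.

```vba
' Sketch: let Excel refresh a Power Query connection on its own timer.
' "Query - StockData" assumes a query named StockData (hypothetical).
Sub SetQueryRefreshEvery10Minutes()
    With ThisWorkbook.Connections("Query - StockData").OLEDBConnection
        .BackgroundQuery = True     ' keep Excel responsive while refreshing
        .RefreshPeriod = 10         ' minutes between automatic refreshes
        .RefreshOnFileOpen = True   ' also refresh when the workbook opens
    End With
End Sub
```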

Can I Scrape Data From Social Media Platforms Into Excel?

Most social media sites have strict policies against scraping. Instead, use their official APIs (e.g., the X API, formerly the Twitter API).

What if the Website Structure Changes?

Web scraping tools rely on HTML structure. You may need to adjust your script or queries if the website updates.

How Can I Extract Images From a Website Into Excel?

Use VBA or third-party tools to download images and embed them in Excel.

Conclusion

Extracting web data into Excel can save time and enhance data analysis. Whether you’re using built-in tools, Power Query, VBA, or third-party software, there’s a method for every use case. Experiment with these techniques and select the one that best suits your needs.

By following these steps, you’ll be able to extract web data like a pro!