March 9, 2025

LLM Agents Explained: How AI Generates Human-Like Responses

Artificial intelligence has been around for years, with the likes of Siri, Alexa, and even Grammarly. However, the buzz around artificial intelligence has grown louder, hasn’t it? It seems like almost everyone is talking about it, from casual conversations to serious seminars.

LLM agents are largely responsible for this renewed interest in artificial intelligence. When ChatGPT, Bard, and others made their debut, everyone could sense a change. These tools arrived ready to transform many things, including how we research and communicate.

What makes LLM agents special is how human their writing sounds. You almost feel like you’re learning with a friend or working with a personal assistant. Their capabilities range from simple tasks to complex programming, making them useful to a wide range of people.

But how do these agents generate human-like responses? We’ll answer this question and more in the following sections.

What Are LLM Agents?

LLM agents are AI systems trained to imitate human conversation and behavior through text. The word “agent” here means that these systems can act on their own to carry out tasks for the user. LLM agents are trained on large amounts of textual data, from which they learn:

- Language patterns

- Language nuances

- Sentence structures

Dumpling AI offers datasets for this training. We gather data from different sources to power AI systems, with the aim of helping these systems generate accurate responses to prompts.

These agents can either operate independently or integrate into an existing app to perform their functions. They can be your assistant, friend, tutor, critic, analyst, colleague, and more.

What Is the Core Architecture Behind LLMs?

In 2017, eight Google researchers published what became landmark research. The paper, “Attention Is All You Need” by Vaswani et al., introduced a deep learning architecture known as the transformer, which became the foundation of modern natural language processing.

The transformer is the architecture behind LLM agents. Unlike earlier models such as recurrent neural networks, which read text one word at a time, it handles long-range dependencies in text well, which helps it generate more coherent, human-like responses.

As the paper’s title suggests, the transformer relies on “attention,” a mechanism that lets the model weigh how relevant each part of the input is to every other part and focus on the most relevant ones.
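To make “attention” concrete, here is a minimal pure-Python sketch of the scaled dot-product attention formula from the paper, softmax(QKᵀ/√d)V. It is an illustration, not a production implementation: real models use tensor libraries and learned projections to produce the query, key, and value vectors, whereas here they are given directly as small toy lists, and the function names are our own.

```python
import math


def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Each argument is a list of vectors, one vector per token.
    Returns the output vectors and the attention weights for each query.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score every key by its dot product with the query, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # non-negative and sums to 1
        all_weights.append(weights)
        # The output is the attention-weighted average of the value vectors.
        dim = len(values[0])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs, all_weights


# Toy example: one query that points in the same direction as the first key.
outputs, weights = attention(
    queries=[[2.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]],
    values=[[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]],
)
```

The first key receives the largest weight, so the output is dominated by the first value vector: the query “pays attention” to the token most relevant to it.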

The original transformer has two components:

- The encoder analyzes the prompt (input text) from the user and converts it into numerical representations the model can work with.

- The decoder then uses that information to generate text, the output.

Most modern LLMs, such as the GPT family behind ChatGPT, use only the decoder part of the transformer.
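In a decoder-only model, each token may attend only to itself and to earlier tokens, since later tokens have not been generated yet. This is enforced with a causal mask. The sketch below uses a hypothetical helper, in plain Python, to apply such a mask to a square matrix of raw attention scores; excluding future positions from the softmax is equivalent to setting their scores to negative infinity.

```python
import math


def causal_attention_weights(scores):
    """Turn a square matrix of raw scores into causally masked attention weights.

    scores[i][j] is how strongly token i would attend to token j.
    Positions j > i (future tokens) are excluded before the softmax,
    so they always receive a weight of 0.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        visible = scores[i][: i + 1]  # token i may see positions 0..i only
        m = max(visible)
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        weights.append([e / total for e in exps] + [0.0] * (n - i - 1))
    return weights


# With equal raw scores, each token spreads its attention evenly over
# itself and the tokens before it.
w = causal_attention_weights([[0.0] * 3 for _ in range(3)])
```

The first token can only attend to itself, the second splits its attention between two positions, and the third between three.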

Examples of Tools and Frameworks Used to Build LLMs

Several tools and frameworks are designed to help build and run LLM agents efficiently. They include:

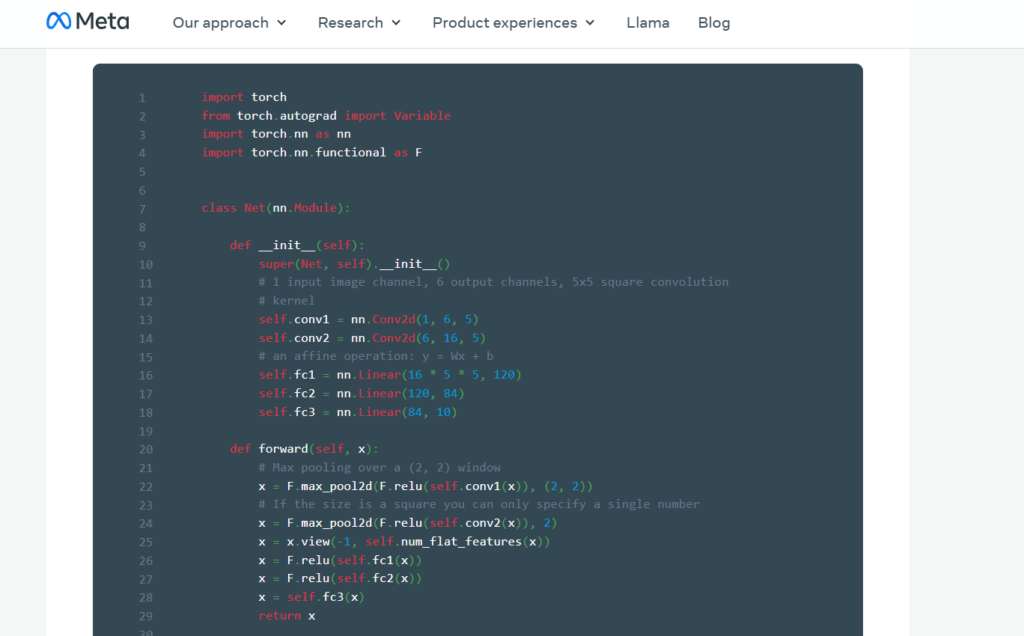

1. PyTorch (Developed by Meta)

Meta’s PyTorch is a popular framework for building and training LLM agents. Some of your favorite AI models, like ChatGPT, were trained with PyTorch.

PyTorch has many strengths, including its dynamic computation graph, which is built as the code runs. This gives developers an easy way to modify and debug AI models. Its tight integration with Python is another bonus for developers.
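A “dynamic” (define-by-run) graph is recorded while the code executes, so ordinary Python loops and conditionals shape it. The toy `Value` class below is a hypothetical, from-scratch illustration of that idea for scalars, not PyTorch’s internals. In PyTorch itself, the same behavior comes from tensors created with `requires_grad=True` and a call to `.backward()`.

```python
class Value:
    """A scalar that records the operations applied to it (illustrative only)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents        # nodes this value was computed from
        self._backward_fn = None       # pushes self.grad back to the parents

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Topologically sort the graph that was recorded during the forward pass.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


def power(x, n):
    # Plain Python control flow decides the graph's shape at run time:
    # this computes x ** n by repeated multiplication.
    result = Value(1.0)
    for _ in range(n):
        result = result * x
    return result


x = Value(3.0)
y = power(x, 2)  # the graph is built as this line runs
y.backward()     # dy/dx = 2 * x = 6.0
```

Calling `power(x, 3)` instead records a graph with three multiplications; nothing has to be declared in advance, which is what makes this style easy to modify and debug.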

Major companies like Tesla, Microsoft, and OpenAI use PyTorch.

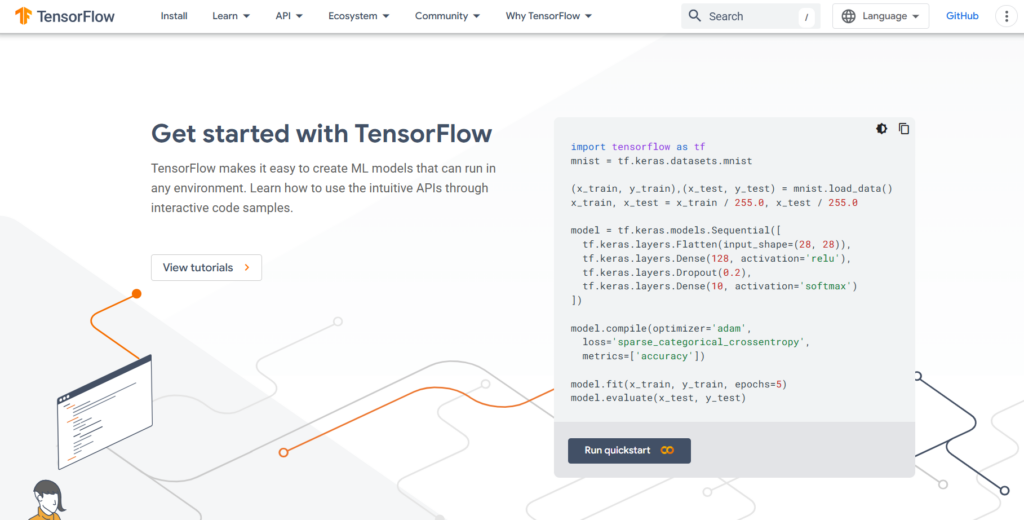

2. TensorFlow (Developed by Google)

TensorFlow powers many Google AI products, such as Google Assistant, Google Translate, and Google Photos. Like PyTorch, it allows developers to build and train LLMs.

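That said, TensorFlow was built around a static computational graph, unlike PyTorch. TensorFlow 2 runs eagerly by default, but it can still compile functions into static graphs with `tf.function`. While a static graph is less flexible to change, defining the structure before running lets the framework optimize the whole computation in advance, which can make execution faster.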
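By contrast, a static graph is declared as a data structure first and executed later with concrete inputs. The sketch below is a hypothetical miniature of that define-then-run style, not TensorFlow’s API: `GRAPH`, `OPS`, `required_inputs`, and `run` are illustrative names. Because the whole structure is known before execution, it can be analyzed ahead of time, which is what lets a real framework optimize it.

```python
# The structure is declared up front as data: (output name, operation, input names).
GRAPH = [
    ("h", "mul", ("x", "w")),
    ("y", "add", ("h", "b")),
]

OPS = {
    "add": lambda a, b: a + b,
    "mul": lambda a, b: a * b,
}


def required_inputs(graph):
    """Analyze the graph before running it: list the names that must be fed in."""
    produced = {out for out, _, _ in graph}
    consumed = {name for _, _, inputs in graph for name in inputs}
    return sorted(consumed - produced)


def run(graph, feeds):
    """Execute the pre-declared graph with concrete input values."""
    env = dict(feeds)
    for out_name, op, inputs in graph:
        env[out_name] = OPS[op](*(env[name] for name in inputs))
    return env


print(required_inputs(GRAPH))                            # ['b', 'w', 'x']
print(run(GRAPH, {"x": 2.0, "w": 3.0, "b": 1.0})["y"])   # 7.0
```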

Large-scale machine learning is one of TensorFlow’s greatest strengths. It can also run models on many kinds of hardware, from phones to GPUs, and you’ll find it in industries such as healthcare and finance.

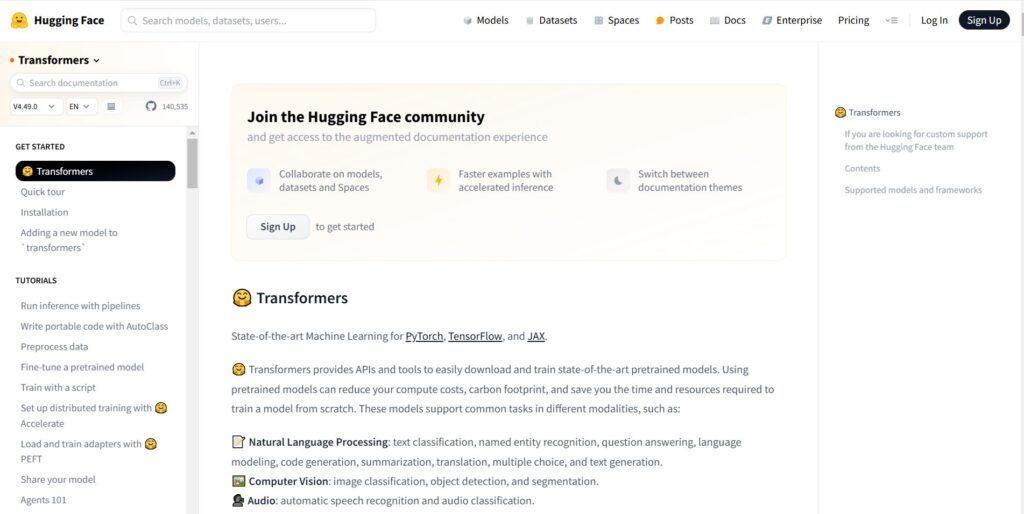

3. Hugging Face Transformers

Hugging Face Transformers works with both PyTorch and TensorFlow, so it fits into different AI workflows. It provides a large collection of pre-trained language models, a good option for developers who would rather not build from scratch, which also makes it a friendly starting point for beginners.

In addition, its ready-made pipelines handle tasks such as generating text, summarizing content, and translating. Hugging Face is also used inside other AI-powered tools, such as search engines.

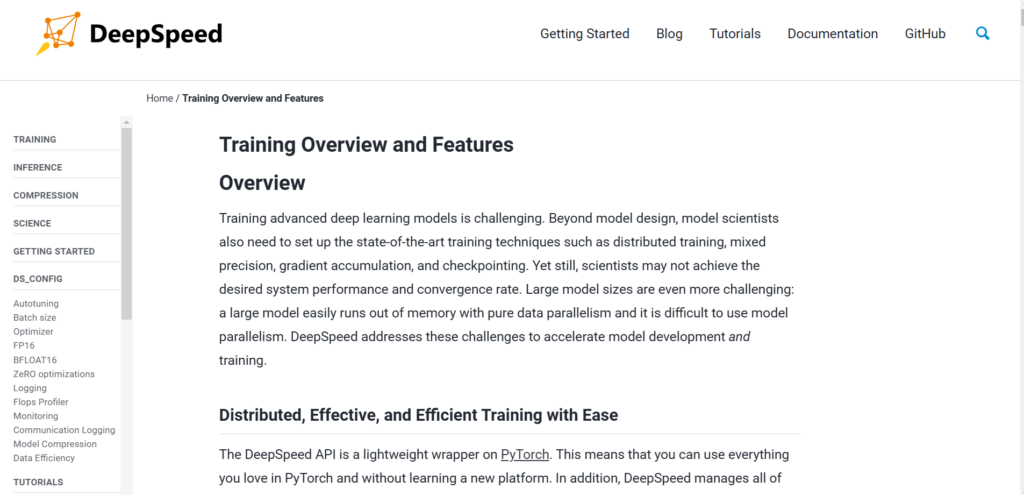

4. DeepSpeed (Developed by Microsoft)

DeepSpeed is a framework for training large-scale AI models at a lower cost than many alternatives. Its optimization tools, such as ZeRO, reduce the memory and compute costs of training these large models. It also splits the training process across multiple Graphics Processing Units (GPUs).
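The basic idea behind splitting training across GPUs can be sketched with data parallelism: each GPU processes a different slice of the batch, and the gradients are averaged. The simulation below runs in a single process with a hypothetical one-parameter model and uses no DeepSpeed API. DeepSpeed’s ZeRO goes further by also partitioning optimizer states, gradients, and parameters across GPUs, which this sketch does not show.

```python
def grad_mse(w, xs, ys):
    """Gradient of the mean squared error of the model y_hat = w * x, with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_grad(w, xs, ys, num_workers):
    """Simulate data-parallel training in a single process.

    Each 'worker' stands in for one GPU: it gets an equal-sized shard of the
    batch and computes a gradient on it. Averaging the per-worker gradients
    (the job of an all-reduce step on real hardware) gives the same result
    as computing the gradient on the whole batch at once.
    """
    if len(xs) % num_workers:
        raise ValueError("batch size must be divisible by num_workers")
    shard = len(xs) // num_workers
    grads = [
        grad_mse(w, xs[k * shard:(k + 1) * shard], ys[k * shard:(k + 1) * shard])
        for k in range(num_workers)
    ]
    return sum(grads) / num_workers


xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
full = grad_mse(1.0, xs, ys)                                # whole batch on one device
split = data_parallel_grad(1.0, xs, ys, num_workers=2)      # two simulated GPUs
```

Both values are -15.0: splitting the batch changes where the work happens, not the result.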

DeepSpeed’s ability to train large models remains one of its greatest strengths. Microsoft has used this platform to run AI tools like Azure AI.

5. Ray

Our final example is Ray, a framework for running many tasks in parallel, across the cores of one machine or an entire cluster. Companies like Uber, OpenAI, and Netflix use it, and for good reasons: spreading work out this way helps AI training and serving scale smoothly. In addition, Ray includes RLlib, a library for reinforcement learning, where AI models improve as they interact with their environment.
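Ray’s core pattern is to fan many independent tasks out to workers and gather the results. The sketch below shows that pattern with Python’s standard `concurrent.futures` module on a single machine; it is a stand-in, not Ray’s API, and the `evaluate` function is a hypothetical placeholder for real work. In Ray itself, a function decorated with `@ray.remote` is launched with `.remote(...)`, its results are collected with `ray.get(...)`, and the tasks can run across a whole cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def evaluate(prompt):
    # Hypothetical stand-in for an expensive task, such as running a model on one prompt.
    return len(prompt.split())


prompts = [
    "narrate the history of Egypt",
    "write a cover letter",
    "summarize this article",
]

# Fan the tasks out to a pool of workers and collect the results.
# map() returns results in the same order as the inputs.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(evaluate, prompts))

print(results)  # [5, 4, 3]
```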

From Data to Knowledge: How to Train LLMs

Humans gain knowledge and grow through training, and the same applies to LLM agents. To function properly, a model is trained on large amounts of varied data, such as the kind Dumpling AI provides: websites, books, social media posts, and more.

This training helps the LLM recognize language patterns and sentence structures. Concretely, it teaches the LLM to predict the next word in a sentence, and along the way the model picks up subtle cues like humor, idioms, and sarcasm. This stage, often called pre-training, focuses on general patterns of language rather than on any specific task.
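Real LLMs learn next-word prediction with a transformer and gradient descent over enormous datasets. The sketch below shows the same goal, predicting the next word from what came before, with a far simpler stand-in: a bigram model that just counts which word follows which. The tiny corpus and the function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows each other word in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the word most often seen after `word`, or None if nothing ever followed it."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train_bigram([
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
])
print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
```

A bigram model only looks one word back; a transformer conditions on the whole preceding text, which is why it can capture long-range meaning.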

Training an LLM takes substantial computing resources and time, anywhere from a few weeks to several months.
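To see why training takes so long, a widely used rule of thumb estimates a transformer’s training compute at about 6 × (number of parameters) × (number of training tokens) floating-point operations (FLOPs). The sketch below applies it to a hypothetical run; the model size, token count, GPU count, and sustained throughput are all assumptions, and real clusters rarely keep every GPU fully busy, so actual times are usually longer.

```python
def training_flops(num_params, num_tokens):
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token
    (roughly 2 for the forward pass and 4 for the backward pass)."""
    return 6 * num_params * num_tokens


def training_days(total_flops, num_gpus, flops_per_gpu):
    """Wall-clock days, assuming every GPU sustains `flops_per_gpu` the entire time."""
    seconds = total_flops / (num_gpus * flops_per_gpu)
    return seconds / 86_400


# Hypothetical run: a 70-billion-parameter model trained on 2 trillion tokens,
# using 1,000 GPUs that each sustain 1e14 FLOP/s (100 TFLOP/s).
flops = training_flops(70e9, 2e12)
days = training_days(flops, num_gpus=1_000, flops_per_gpu=1e14)
print(f"{flops:.1e} FLOPs, about {days:.0f} days")  # 8.4e+23 FLOPs, about 97 days
```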

How LLMs Create Human-Like Responses

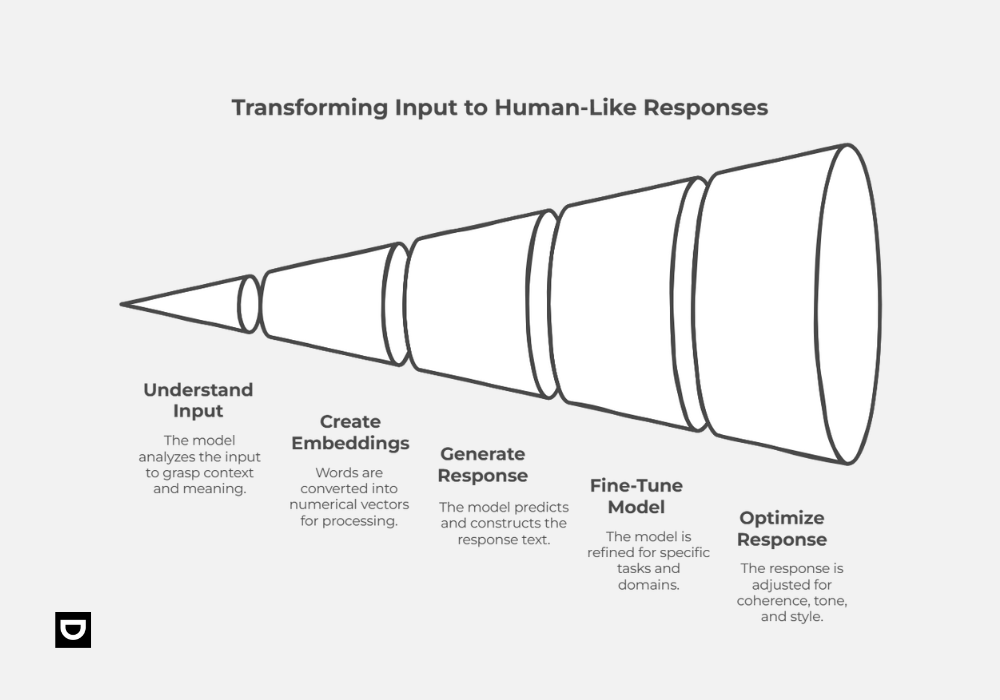

The trained LLM agent puts its training to work by generating text when the user provides a prompt. The model goes through several steps to make its response sound human:

1. Understanding the Input: Contextualization

Like a student writing a test, the LLM agent must first understand the user’s input. What does the user want? The model breaks the sentence down into tokens (words or pieces of words) and analyzes them. This analysis helps it grasp the context, the meaning behind the words, and the syntax.

After breaking down the sentence into tokens, the model processes these tokens using the transformer architecture, considering their relationships to each other.

For example, take the request “Write a cover letter.” The LLM agent considers the words, the sentence structure, the context, and any earlier messages in the conversation, such as details about the job opportunity.
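Here is a toy version of that first step, assuming simple word-level tokens. Production LLMs use subword tokenizers such as byte-pair encoding (BPE), with a fixed vocabulary learned before training; the regular expression, the `<unk>` token, and the tiny vocabulary below are hypothetical simplifications.

```python
import re


def tokenize(text):
    """Split text into lowercase words and individual punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(texts):
    """Assign an integer ID to every token seen in the given texts."""
    vocab = {"<unk>": 0}  # reserved ID for tokens the vocabulary has never seen
    for text in texts:
        for tok in tokenize(text):
            vocab.setdefault(tok, len(vocab))
    return vocab


def encode(text, vocab):
    """Convert text into the list of token IDs the model actually receives."""
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokenize(text)]


vocab = build_vocab(["Write a cover letter.", "Write a short poem."])
print(tokenize("Write a cover letter."))       # ['write', 'a', 'cover', 'letter', '.']
print(encode("Write a cover letter.", vocab))  # [1, 2, 3, 4, 5]
print(encode("Write a resume.", vocab))        # [1, 2, 0, 5]
```

An unseen word such as “resume” maps to the `<unk>` ID here; subword tokenizers avoid that problem by splitting unfamiliar words into smaller known pieces.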

2. Contextual Representation

After understanding the input, LLM agents use embeddings to capture each word’s meaning and its relationship to other words. Embeddings are vectors, lists of numbers that represent words, phrases, or entire texts in a way that captures their meaning: words with similar meanings get similar vectors.

In other words, the model converts the input tokens into numbers (embeddings) and then passes them through the transformer.
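The sketch below shows both ideas with made-up numbers: looking up a vector for each token, and using cosine similarity to check that related words have similar vectors. The 3-dimensional values are hypothetical; real embeddings have hundreds or thousands of dimensions and are learned during training.

```python
import math

# Hypothetical 3-dimensional embeddings, chosen by hand for illustration.
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}


def embed(tokens):
    """Look up the vector for each token: these numbers are what the transformer processes."""
    return [EMBEDDINGS[tok] for tok in tokens]


def cosine_similarity(a, b):
    """Close to 1.0 when two vectors point the same way, i.e. similar meanings."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


king, queen, apple = embed(["king", "queen", "apple"])
print(round(cosine_similarity(king, queen), 2))  # 0.99
print(round(cosine_similarity(king, apple), 2))  # 0.3
```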

3. Generating the Response: Autoregression

Next comes the generation process itself, which LLMs carry out using autoregression: the model produces text one token at a time, predicting each new token from the tokens that came before it (the prompt plus everything generated so far).

For example, the user might ask the model to “narrate the history of Egypt.” The model generates a first token, “Egypt,” then predicts the next token from the prompt and the text so far, using patterns learned in training. The following tokens could be “has,” then “a,” then “vast,” then “history,” and so on.
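The loop below is a minimal sketch of autoregressive generation using greedy decoding, which always picks the most likely next token. The probability table is hypothetical and only looks at the last token, whereas a real LLM computes its predictions from the whole context with a transformer.

```python
# Hypothetical next-token probabilities standing in for a trained model.
TOY_MODEL = {
    "<start>": {"Egypt": 0.9, "The": 0.1},
    "Egypt": {"has": 0.8, "is": 0.2},
    "has": {"a": 0.95, "many": 0.05},
    "a": {"vast": 0.6, "long": 0.4},
    "vast": {"history": 0.9, "desert": 0.1},
    "history": {"<end>": 1.0},
}


def next_token_probs(context):
    """Return a probability for each candidate next token, given the tokens so far.
    This toy version only looks at the most recent token."""
    return TOY_MODEL.get(context[-1], {"<end>": 1.0})


def generate(max_new_tokens=10):
    """Greedy autoregressive decoding: predict the next token, append it to the
    context, and repeat until an end token or the length limit."""
    context = ["<start>"]
    for _ in range(max_new_tokens):
        probs = next_token_probs(context)
        token = max(probs, key=probs.get)  # greedy: take the most likely token
        if token == "<end>":
            break
        context.append(token)
    return " ".join(context[1:])


print(generate())  # Egypt has a vast history
```

Many real systems sample from the probabilities (for example, with a temperature setting) instead of always taking the most likely token, which makes the output less repetitive.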

4. Fine-Tuning and Specialization

During fine-tuning, a pre-trained model receives additional training to refine it. Fine-tuning is necessary for your LLM agent to carry out specific tasks. It helps the model specialize by training it further on data from a particular field, such as medicine or technology.

Fine-tuning helps the model handle queries in that field and gives even better, more human-like responses.
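Real fine-tuning continues gradient-based training of a pre-trained model’s weights on domain data. As a much simpler analogy, the sketch below uses a hypothetical word-count (bigram) model: it is first “pre-trained” on general sentences, then “fine-tuned” by continuing to update the same counts on medical sentences, which shifts its predictions toward the new domain.

```python
from collections import Counter, defaultdict


def update_counts(counts, corpus):
    """Add next-word counts from `corpus` to an existing model."""
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Most frequent word seen after `word`, or None if there is none."""
    followers = counts.get(word)
    return followers.most_common(1)[0][0] if followers else None


# "Pre-training" on general text.
model = update_counts(defaultdict(Counter), [
    "the report was long",
    "the report was late",
    "the patient was tired",
])
before = predict_next(model, "the")  # "report"

# "Fine-tuning": keep what was already learned and continue on medical text.
update_counts(model, [
    "the patient was stable",
    "the patient was discharged",
])
after = predict_next(model, "the")   # "patient"
```

The key design point carries over to real fine-tuning: training starts from what the model already knows instead of from scratch, so a small amount of domain data is enough to shift its behavior.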

5. Response Optimization and Human-Like Behavior

In addition to producing text that mimics human writing, LLM agents capture various aspects of human communication, which makes them more effective. Here are some of these aspects:

- Incorporating Real-World Knowledge: Thanks to their training data, LLM agents can incorporate real-world knowledge into their responses like a smart friend would. This lends credibility to their generated responses and helps them engage in discussions.

- Tone and Style: Through training, these agents can also be flexible with their tone and style, depending on what the user wants. They can add humor, be professional, take a casual tone, and even use slang.

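- Coherence and Consistency: One of the most “human” aspects of many LLM agents is their apparent memory. The model itself does not remember past exchanges; instead, the application sends earlier messages back to the model along with each new prompt, up to the limit of the model’s context window. By referring to what was said earlier, the agent can hold a real discussion, stay consistent, and give tailored responses.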
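The sketch below shows one common way an application can provide that “memory”: it keeps the message history, always includes a system message that sets tone and style, and drops the oldest messages when the history exceeds a token budget. The `Conversation` class, the role/content message format (similar to what many chat APIs use), and the word-count stand-in for a real tokenizer are all hypothetical.

```python
def count_tokens(text):
    # Rough stand-in: real systems count tokens with the model's own tokenizer.
    return len(text.split())


class Conversation:
    """Keeps the message history and trims it to fit a token budget."""

    def __init__(self, system_prompt, max_tokens):
        # The system message sets tone and style; it is always kept.
        self.system = {"role": "system", "content": system_prompt}
        self.history = []
        self.max_tokens = max_tokens

    def add(self, role, content):
        self.history.append({"role": role, "content": content})

    def build_prompt(self):
        """Return the system message plus the most recent messages that fit
        within max_tokens; older messages are dropped first."""
        budget = self.max_tokens - count_tokens(self.system["content"])
        kept = []
        for message in reversed(self.history):
            cost = count_tokens(message["content"])
            if cost > budget:
                break
            kept.append(message)
            budget -= cost
        return [self.system] + kept[::-1]


chat = Conversation("Answer in a casual tone.", max_tokens=12)
chat.add("user", "I am applying for a job")
chat.add("assistant", "Nice, which role?")
chat.add("user", "Write a cover letter")
for message in chat.build_prompt():
    print(message["role"], ":", message["content"])
```

With a budget of 12 words, the oldest message no longer fits, so the model receives only the system message and the last two turns. This is also why very long chats can lose track of how they began.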

6. Ethical Considerations: The Limits of Human-Like Responses

LLM agents are not humans, nor are they conscious beings. This might seem obvious, but their skill at generating human-like responses can sometimes blur the line. As we’ve seen, that human-like behavior comes from patterns learned during training.

Consequently, as we rely on LLM agents to generate text, we must also consider the ethical concerns. LLM agents can produce incorrect or biased information. They are also vulnerable to malicious use, such as impersonating an individual.

Applications of LLM Agents

The ability of LLM agents to generate human-like responses has led to their widespread adoption in various fields. Some common applications include:

- Customer Service: Businesses use chatbots powered by LLM agents to automate customer support, answering queries and solving problems without human intervention.

- Content Creation: Writers, journalists, and marketers use LLMs to generate articles, blog posts, and marketing copy at scale.

- Code Generation: Tools like GitHub Copilot use LLMs to assist developers by generating code snippets and offering suggestions.

- Personal Assistants: Virtual assistants like Siri and Google Assistant increasingly use LLM technology to perform tasks such as setting reminders, answering questions, and more.

- Mental Health Support: Some organizations use LLM-powered chatbots to provide initial mental health support or therapeutic conversations.

Conclusion

LLM agents have changed the way we engage with AI, offering human-like responses that are becoming increasingly indistinguishable from those of actual people.

LLMs can understand context, generate relevant text, and simulate meaningful conversations by leveraging the power of transformer models, attention mechanisms, and vast datasets.

However, their limitations and the ethical concerns they raise remind us that while these models may appear human-like, they remain complex tools with real challenges.

As these technologies continue to improve, LLM agents are likely to play an even larger role in transforming industries and enhancing our digital experiences.